Introduction

Activation functions are among the most important components of neural networks. They determine whether a neuron should be “activated” based on the input signals it receives, i.e., whether the neuron should fire or not. This process is crucial for introducing non-linearity into the network, which allows it to learn and model complex patterns in data that go beyond simple linear relationships. Without this non-linearity, a stack of layers would behave like a single linear transformation, no matter how many layers it had.
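A minimal scalar sketch in Python makes the non-linearity point concrete. The two "layers" and their weights below are arbitrary illustrative values, not taken from any real network. Stacked without an activation, they collapse into one linear function. With a ReLU between them, the output bends into a piecewise-linear shape.

```python
# Two hypothetical "layers" acting on a single scalar input; weights are arbitrary examples.
def layer1(x):
    return 2.0 * x + 1.0

def layer2(h):
    return -3.0 * h + 0.5

def relu(z):
    return max(0.0, z)

def stacked_linear(x):
    # No activation: layer2(layer1(x)) = -3*(2x + 1) + 0.5 = -6x - 2.5
    return layer2(layer1(x))

def collapsed(x):
    # The single linear function that stacked_linear is equivalent to
    return -6.0 * x - 2.5

def stacked_with_relu(x):
    # A ReLU between the layers prevents the collapse into one line
    return layer2(relu(layer1(x)))

for x in [-2.0, -1.0, 0.0, 1.0, 2.0]:
    print(x, stacked_linear(x), collapsed(x), stacked_with_relu(x))
```

For inputs at or below -0.5, `stacked_with_relu` stays flat at 0.5, whereas `stacked_linear` keeps following the single line -6x - 2.5.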

Basic Concept

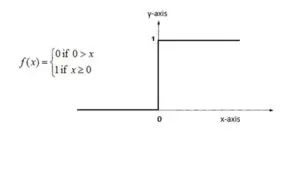

An activation function analyzes an incoming signal and determines whether it is strong enough to trigger a neuron’s response.

– Input (x-axis): shows the strength of the incoming signal.

– Output (y-axis): shows the neuron’s state, i.e., whether it is activated or not.

In simple terms:

– When the input is low, the neuron remains inactive (output close to 0).

– As the input increases and crosses a certain value, or threshold, the neuron activates (output close to 1).

Check the image below.

You can visualize this as moving from a “zero” state (inactive) to a “one” state (active). This is similar to a binary system, which a computer can easily interpret.
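The binary behavior described above can be sketched as a simple step function. The default threshold of 0 and the choice to fire when the input exactly equals the threshold are assumptions made for illustration.

```python
def binary_step(x, threshold=0.0):
    """Return 1 (active) if the input reaches the threshold, else 0 (inactive)."""
    return 1 if x >= threshold else 0

inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
print([binary_step(x) for x in inputs])  # [0, 0, 1, 1, 1]

# A higher threshold means a stronger signal is needed to fire
print(binary_step(0.7, threshold=1.0))   # 0
```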

However, such an abrupt jump from 0 to 1 can pose challenges.

Challenges

The simplest form of activation function is binary (either 0 or 1), but this abrupt change can cause two main issues:

1. Mathematical Optimization:

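– Sudden changes, or discontinuities, are difficult to handle in mathematical equations: the step function’s derivative is zero everywhere except at the threshold, where it is undefined.

– Optimization algorithms such as gradient descent rely on these derivatives, so they struggle with non-smooth functions and have a hard time finding an optimal solution.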

2. Lack of Real-World Representation:

– In many real-world scenarios (social systems, business models, behavioral patterns, technological changes), transitions are gradual rather than abrupt.

– Real-world changes often occur gradually and continuously rather than in sudden jumps. So we need an activation function that can switch smoothly between the two states and that is flexible enough to change gradually or quickly depending on the input.
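To illustrate the optimization problem, the sketch below estimates slopes with a central finite difference. This is a numerical approximation chosen here for illustration, not how deep learning frameworks compute gradients. Away from the threshold, the step function’s estimated slope is 0, so gradient descent gets no signal about which direction to move. The sigmoid’s slope is small but non-zero everywhere.

```python
import math

def binary_step(x, threshold=0.0):
    return 1.0 if x >= threshold else 0.0

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def numerical_derivative(f, x, h=1e-4):
    # Central finite difference: (f(x + h) - f(x - h)) / (2h)
    return (f(x + h) - f(x - h)) / (2 * h)

for x in [-2.0, -0.5, 0.5, 2.0]:
    print(f"x={x:+.1f}  step'={numerical_derivative(binary_step, x):.4f}  "
          f"sigmoid'={numerical_derivative(sigmoid, x):.4f}")
```

At exactly x = 0, the step’s finite difference jumps to about 1/(2h) = 5000, which reflects the discontinuity rather than a useful gradient.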

Need for Smoother Activation Functions

To overcome these limitations, we use smoother functions that can capture both gradual and sudden changes.

These functions allow the network to adjust its behavior dynamically, providing flexibility in how it models complex patterns.
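One way to get both gradual and sudden transitions from a single smooth function is to scale the sigmoid’s input by a steepness factor. In this sketch, `k` and `threshold` are hypothetical illustrative parameters. A small `k` gives a gentle slope, and a large `k` approaches the binary step while staying smooth. In a real neuron, the learned weight and bias play a similar role, since the sigmoid is applied to w·x + b.

```python
import math

def scaled_sigmoid(x, k=1.0, threshold=0.0):
    """Sigmoid with steepness k centered at threshold; large k approaches a binary step."""
    return 1.0 / (1.0 + math.exp(-k * (x - threshold)))

inputs = [-1.0, -0.2, 0.0, 0.2, 1.0]
for k in (1.0, 5.0, 50.0):
    # Every curve passes through 0.5 at the threshold; only the steepness differs
    print(k, [round(scaled_sigmoid(x, k), 3) for x in inputs])
```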

Examples of Activation Functions

1. Sigmoid Function:

– Smoothly maps input values to a range between 0 and 1.

– Formula: Sigmoid(x) = 1 / (1 + e^(-x))

– Useful for models where we need a probability output.
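A direct implementation of the Sigmoid formula is short, but in Python `math.exp(-x)` raises `OverflowError` for large negative `x`. The sketch below uses a common rewrite for negative inputs to avoid that. The function name is our own.

```python
import math

def sigmoid(x):
    """Numerically stable logistic sigmoid: 1 / (1 + e^(-x))."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x) so exp never overflows
    z = math.exp(x)
    return z / (1.0 + z)

print(sigmoid(0.0))      # 0.5
print(sigmoid(-1000.0))  # 0.0 (no OverflowError)
print(sigmoid(3.0))      # a value close to 1, usable as a probability
```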

2. Tanh (Hyperbolic Tangent) Function:

– Similar to Sigmoid but outputs values between -1 and 1.

– Formula: Tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x))

– Its output is zero-centered, which often makes optimization easier.
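The Tanh formula can be checked against Python’s built-in `math.tanh`. Averaging over inputs that are symmetric around 0 illustrates the zero-centering: Tanh outputs average around 0, while Sigmoid outputs average around 0.5. The input values are arbitrary examples.

```python
import math

def tanh_from_formula(x):
    # Direct translation of (e^x - e^(-x)) / (e^x + e^(-x)).
    # math.exp overflows for |x| above roughly 709, so math.tanh is preferred in practice.
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

inputs = [-2.0, -1.0, 0.0, 1.0, 2.0]

# The formula agrees with the built-in implementation
for x in inputs:
    print(x, round(tanh_from_formula(x), 4), round(math.tanh(x), 4))

# Average output over inputs symmetric around 0
tanh_mean = sum(math.tanh(x) for x in inputs) / len(inputs)
sigmoid_mean = sum(sigmoid(x) for x in inputs) / len(inputs)
print("tanh mean:", tanh_mean)        # approximately 0
print("sigmoid mean:", sigmoid_mean)  # approximately 0.5
```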

3. ReLU (Rectified Linear Unit) Function:

– Outputs 0 for negative inputs and the input value itself for positive inputs.

– Formula: ReLU(x) = max(0, x)

– Efficient and widely used in hidden layers due to its simplicity.
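ReLU needs only a comparison, which is part of why it is cheap to compute. The sketch also shows its gradient. ReLU is not differentiable at exactly 0, so treating the gradient there as 0 is a convention assumed here.

```python
def relu(x):
    """ReLU(x) = max(0, x)."""
    return max(0.0, x)

def relu_derivative(x):
    # Gradient is 1 for positive inputs and 0 for negative ones;
    # at exactly 0 it is undefined, and 0 is used here by convention.
    return 1.0 if x > 0 else 0.0

inputs = [-3.0, -0.5, 0.0, 0.5, 3.0]
print([relu(x) for x in inputs])             # [0.0, 0.0, 0.0, 0.5, 3.0]
print([relu_derivative(x) for x in inputs])  # [0.0, 0.0, 0.0, 1.0, 1.0]
```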

4. Softmax Function:

– Converts a vector of scores (logits) into probabilities that sum to 1.

– Often used in the output layer of classification models.

– Formula: Softmax(x_i) = e^(x_i) / Σ_j e^(x_j), where the sum runs over every element x_j of the input vector.
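Here is a minimal Softmax sketch in plain Python. Subtracting the maximum score before exponentiating is a standard stability trick. It cancels out in the ratio, so the probabilities are unchanged, but it keeps `math.exp` from overflowing on large logits. The example scores are arbitrary.

```python
import math

def softmax(logits):
    """Convert a list of scores (logits) into probabilities that sum to 1."""
    # Subtracting the max leaves the result unchanged (it cancels in the ratio)
    # but keeps math.exp from overflowing on large scores.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
print(sum(probs))                    # 1.0, up to floating-point rounding
print(softmax([1000.0, 1000.0]))     # [0.5, 0.5]
```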

Applications of Activation Functions in the Real World

- Image Recognition → ReLU for feature extraction, Softmax for classification

- Natural Language Processing (NLP) → Tanh in LSTMs, ReLU in Transformer feed-forward layers

- Medical Diagnosis AI → Sigmoid for binary outcomes (disease vs. no disease)

- Speech Recognition → Softmax for word prediction models

Conclusion

Activation functions play an important role in neural networks by introducing non-linearity, enabling the network to learn complex data patterns.

Unlike a simple binary step, modern functions such as Sigmoid, Tanh, ReLU, and Softmax change continuously with their input, making them better suited to real-world applications where changes are often gradual rather than abrupt.

By choosing the right activation function, we can enhance the performance of neural networks, making them more powerful and versatile in solving sophisticated tasks.
